VAILL's AI Augmented Legal Research Class Write-Up: Students Achieve Comparable Results In Less Time Using AI

Midpage ranked as the third most popular tool for experimentation, behind ChatGPT and Claude and ahead of Lexis's Protégé and Westlaw's CoCounsel.

Emily Pavuluri, an instructor and law librarian at Vanderbilt’s AI Law Lab, recently published insights from her Spring 2025 AI Augmented Legal Research class.

Her excellent write-up focused on the class capstone project, which simulated a real-world legal research assignment.

The Setup

Each student was given a unique legal research assignment. There were two phases, and students tracked their time throughout both.

Phase 1: No AI. They had a weekend to tackle their preliminary research in preparation for a “partner meeting” early the next week. For this phase, they were allowed to use only traditional methods without any AI features or tools.

Phase 2: All the AI. After their partner meeting, students were free to use any AI tools at their disposal to research the same question again. This time, they would go on to present their findings to their classmates as if it were a law firm’s AI task force meeting: What was their use case? What worked? What didn’t?

Requiring students to become familiar with their legal research issue before using AI made it easier for them to verify and cross-reference their AI research against the ground truth they had already established. This familiarity made them better users, and better critics, of the tools they were encouraged to experiment with and evaluate.

The Takeaways

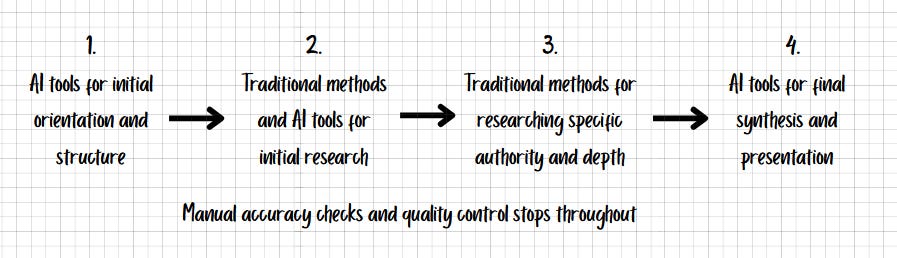

A Hybrid Approach: The “overwhelming consensus (30 of 32 students) favored a hybrid approach of combining AI and traditional research methods.” This involved using AI for brainstorming, sense-checking, and orientation, followed by a combination of traditional and AI tools for a deeper dive.

At Midpage, we made the bet long ago that any AI-powered legal research tool worth its salt needed to incorporate the basic (i.e., “traditional”) workflows: searching, reading cases, and checking their treatment history. The preferences of the Vanderbilt law students suggest that this workflow is here to stay.

Popularity: The most popular AI tools for experimentation were ChatGPT (22 mentions), Claude (16 mentions), midpage.ai (14 mentions), then Lexis AI/Protégé (11 mentions) and Westlaw’s AI-Assisted Research/CoCounsel (9 mentions).

We’re taking two things from this data point: First, our core features (search, AI filtering, a citator, and our research agent) resonate with users. Second, there is a real eagerness to try something other than Westlaw and Lexis products.

Efficiency: Another statistic jumps off the page and speaks for itself: 28 of 32 students felt “they could achieve the same or comparable results as traditional research with their hybrid approach but in much less time.”

Limitations: The write-up included a list of the most significant limitations identified by the students, including a desire for more in-depth analysis, improved citations, and a lingering concern about accuracy and the potential for hallucinations. Even when using tools designed specifically for legal research, students felt it was important to double-check outputs. This is a good thing.

Conclusion

Hats off to Emily and her students for putting these tools to the test. The bottom line: when given the freedom to experiment, students are fully capable of judging when and how to use these tools.

Read the full article below!